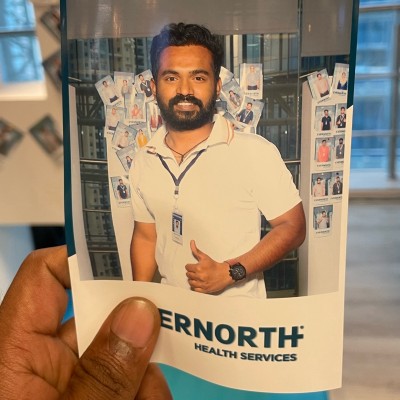

Soumith Beldha

Data Engineer with extensive experience developing data pipelines and frameworks that ingest, process, analyze, and store data.

Over more than 6 years in data engineering, I have worked on ETL development, migration, and production support projects spanning batch data, cloud, and big data. I have designed and developed data lakehouses, databases, data marts, and data lakes, making them scalable and fault-tolerant as requirements demand.

- Role: Data & Unix Engineer

- Years of Experience: 6 years

Skillsets

- SQL - 6.5 Years

- Python - 4 Years

- AWS - 3 Years

- Algorithms

- Azure

- Distributed Systems

- ELT

- ETL

- Hive

- PySpark

- Spark

- Data Lakehouse

- Data Lakes

- Data Warehouses

- Data Marts

Professional Summary

6 Years

- Data Engineer | Koantek | Jan 2024 - Present | 2 yr

- Data Engineer | Life TCS | Sep 2022 - Aug 2024 | 1 yr 11 months

- Data Engineer | Infosys | Aug 2021 - Sep 2022 | 1 yr 1 month

- Database and UNIX Developer | Capgemini | Feb 2018 - Aug 2021 | 3 yr 6 months

Applications & Tools Known

Redshift

EMR

S3

EC2

IAM

Glue

Lambda

ADLS

Databricks

Apache Spark

Informatica

MySQL

Snowflake

PostgreSQL

OLAP

OLTP

NoSQL

Airflow

Bitbucket

Jenkins

Git

PuTTY

Perforce

Work History

6 Years

Data Engineer

Koantek | Jan 2024 - Present | 2 yr

Implemented an end-to-end pipeline in the Databricks Lakehouse to help businesses derive key KPIs. Developed a scalable, reliable, and easily maintainable ingestion pipeline that loads data from Azure Data Lake Storage (ADLS) and Azure SQL Managed Instance, supporting both historical and incremental loads. Designed a data model of fact, dimension, and reference tables and built the pipeline on the Databricks medallion architecture, with Databricks Workflows handling scheduling and management. Also created a pipeline that ingests data from MongoDB and MySQL and transforms it into final analytical views, including a streaming use case; built several dashboards on these views using the Databricks Dashboards API; and scheduled the ingestion and transformation jobs in Databricks Workflows, integrated with an API that triggers the workflows.

Data Engineer

Life TCS | Sep 2022 - Aug 2024 | 1 yr 11 months

Developed robust end-to-end ETL pipelines on AWS that support backfills and reloads and are scalable, maintainable, and dynamic enough to be reused across multiple source systems. Built a centralized data lake in S3 that receives data from different sources in different formats, then cleans it and converts it to a columnar format for downstream processing. After ingestion, a metadata-driven governance and data quality (GDQ) layer uses transient EMR clusters running Spark to process the data lake, joining normalized tables into denormalized domains. From the GDQ layer, data moves into individual data warehouses in Redshift, which load data from multiple sources in parallel using Redshift Spectrum and stored procedures. The complete project flow is orchestrated end to end with Apache Airflow.

Data Engineer

Infosys | Aug 2021 - Sep 2022 | 1 yr 1 month

Migrated a finance data mart from Azure Synapse to AWS Redshift. Created dynamic stored procedures that perform Type 2 (slowly changing dimension) loads for multiple domains and source systems concurrently. Put reconciliation controls in place at each hop to ensure data integrity. Resolved serializable isolation violations that arose when tens of pipeline instances ran concurrently, using transaction controls and table locks without introducing deadlocks. Reduced stored procedure processing time from one hour to 10 minutes by optimizing partition sizing and choosing appropriate distribution and sort keys.

Database and UNIX Developer

Capgemini | Feb 2018 - Aug 2021 | 3 yr 6 months

Developed and modified Sybase objects such as stored procedures, tables, views, indexes, and schemas in the development environment. Prepared test cases and performed unit testing of the developed code, as well as regression testing of the process flow, in the development environment. Migrated Unix from the Aurora environment to Aquilon. Maintained all non-production Unix environments, including NFS paths, AFS paths, Unix user access, host performance, and data purging. Supported the production maintenance team during deployments and production issues. Worked with clients to understand new developments and business requirements.

Major Projects

3 Projects

Data Lakehouse Implementation: IndiaLends

Jan 2024 - Present | 2 yr

Implemented an end-to-end pipeline in the Databricks Lakehouse to help the business derive key KPIs. Developed a scalable, reliable, and easily maintainable ingestion pipeline that loads data from Azure Data Lake Storage (ADLS) and Azure SQL Managed Instance, supporting both historical and incremental loads as required. Designed a data model of fact, dimension, and reference tables to ensure a robust data flow, and built the pipeline on the Databricks medallion architecture. Used Databricks Workflows to schedule and manage the pipeline.

Data Lakehouse Implementation: Uniqus

Jan 2024 - Present | 2 yr

Developed a lakehouse proof of concept to demonstrate the features, efficiency, and advantages of Databricks. Created a pipeline that ingests data from MongoDB and MySQL and transforms it into final analytical views, including a streaming use case. Developed several dashboards on these views using the Databricks Dashboards API. Scheduled the ingestion and transformation jobs with Databricks Workflows and integrated them with an API that triggers the workflows.

Snowflake to Databricks Migration: Funplus & CIQ

Jan 2024 - Present | 2 yr

Converted all Snowflake DDL statements to equivalent Databricks SQL statements. Wrote PySpark/Python scripts to establish data lineage, load the relevant tables according to that lineage, and use watermarking to track the load status of each table. After the historical load, created scripts to validate all migrated data. Built a pipeline to ingest incremental data, and created and scheduled jobs to run it.

Education

Bachelor's Degree in Computer Science Engineering

Lovely Professional University, Punjab (2017)

Certifications

Databricks Certified Data Engineer Associate

AWS Certified Cloud Practitioner

Oracle 11g Certified