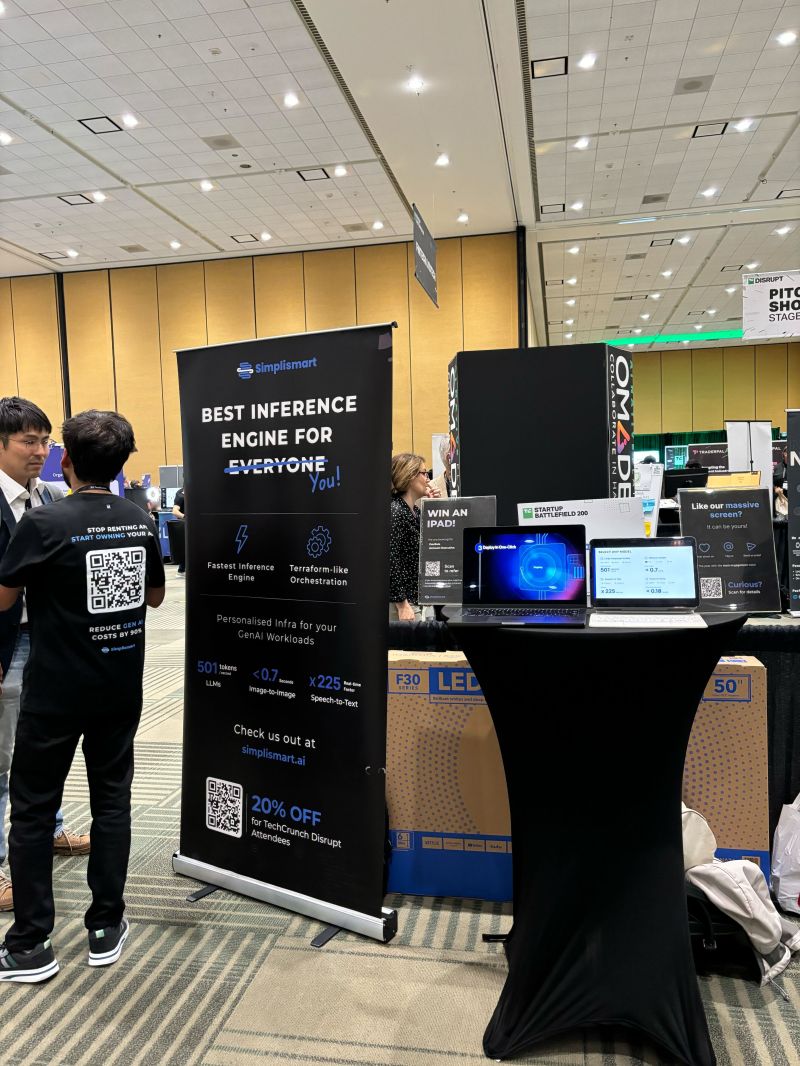

Simplismart

- Founded in: 2022
- Team Size: 25
- Company Industry: Software Development
- Headquarters: San Francisco

About us

Fastest inference for generative AI workloads. Simplified orchestration via a declarative language similar to Terraform. Deploy any open-source model and take advantage of Simplismart’s optimised serving. As workloads grow in volume and variety, one size does not fit all: use our building blocks to personalise an inference engine for your needs.

***API vs In-house***

Renting AI through third-party APIs has clear downsides: data security risks, rate limits, unreliable performance, and inflated cost. Every company has different inference needs: *one size does not fit all.* Businesses need control to manage their cost-performance tradeoffs. Hence the movement towards open source: businesses prefer small, niche models trained on relevant datasets over large generalist models that do not justify their ROI.

***Need for an MLOps platform***

Deploying large models comes with its own hurdles: access to compute, model optimisation, scaling infrastructure, CI/CD pipelines, and cost efficiency, all of which require highly skilled machine learning engineers. Just as tools emerged to ease the transition to cloud and mobile, the shift towards generative AI needs tools of its own. MLOps platforms simplify orchestration workflows for in-house deployment cycles. Two kinds of off-the-shelf solutions are readily available:

1. Orchestration platforms with a model-serving layer: *these do not offer optimised performance for all models, limiting users’ ability to squeeze out performance*

2. GenAI cloud platforms: *GPU brokers that offer no control over cost*

Enterprises need control. Simplismart’s MLOps platform gives them the building blocks to tailor inference to their needs, and its inference engine, the fastest available, lets businesses run each model at peak performance. The engine has been optimised at three layers: model serving, infrastructure, and the interaction between the model and the GPU chip. It is further enhanced with a known model compilation technique.

- Funding Round/Series: Seed Round
- Funding Amount: $8
- Investors: Accel and Anicut Capital

Culture

Beliefs That Shape Our Work and Vision

At Simplismart, we live and breathe our core beliefs and vision. They define us and set what we expect from everyone on the team.

Take Your Work Seriously, not Yourself

Why would you want to work at Simplismart?

Beyond the salary and benefits on offer, Simplismart will give you an opportunity to explore MLOps and GenAI and solve real problems.

Backed by Marquee Investors

Our investors include Accel, Titan Capital, Shastra VC and other marquee angels. We have raised over $8M to date.

Work on Deep ML and GenAI problems

Simplismart isn't just an API or GPU provider. We innovate across the ML stack and are a true infrastructure company.

Enterprise Clients from around the world

Solve real problems for some of the largest companies across the world. Your contributions will have real-world impact.

Work with Industry Experts

Our team is stacked with folks from some of the largest and most innovative companies. Work with alumni from Google, Oracle, Amazon, Microsoft and other titans.

Stay on the frontier of the AI transformation

The ML landscape is ever-evolving, and at Simplismart you will be riding this wave, deploying optimised solutions as new models and paradigms emerge.